RethinkDB 1.5: secondary indexes, batched inserts performance improvements, soft durability mode

We are pleased to announce RethinkDB 1.5 (The Graduate), so go download it now!

This release includes the long-awaited support for secondary indexes, a new algorithm for batched inserts that results in an ~18x performance improvement, support for soft durability (don’t worry – off by default), and over 180 bug fixes, features, and enhancements.

Upgrading to RethinkDB 1.5? Make sure to migrate your data before you upgrade.

Secondary indexes

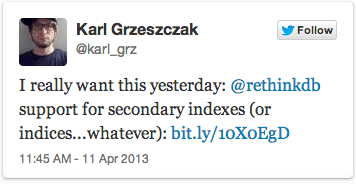

Support for secondary indexes has been the most requested feature since we launched RethinkDB, and has been in development for over six months. It required a massive amount of server work and involved modifying almost every part of the codebase: the BTree code, the concurrency subsystem, the distribution layer, the query language, the client drivers, and even the web UI.

We worked hard to make sure secondary indexes are extremely easy to use. Here’s how you’d create a secondary index on the last_name attribute:

r.table('users').indexCreate('last_name')

Then getting all users with the last name Smith would be:

r.table('users').getAll('Smith', { index: 'last_name' })

Or you could retrieve arbitrary ranges of the index. For example, all users whose last names are between Smith and Wade:

r.table('users').between('Smith', 'Wade', { index: 'last_name' })

Listing and dropping indexes is also a part of the API:

r.table('users').indexList() // list indexes on table 'users'

r.table('users').indexDrop('last_name') // drop index 'last_name' on table 'users'

In addition to manipulating secondary indexes via ReQL, you can perform these operations from the admin UI.

You can define compound indexes, and even indexes based on arbitrary ReQL expressions. Secondary indexes can be used to do efficient table joins, and are sharded and replicated along with the rest of the database. Learn more about how to use secondary indexes in the documentation.
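To make the getAll and between lookups above more concrete, here is a minimal in-memory sketch of what a secondary index does conceptually. It is not RethinkDB's implementation (the server uses B-trees and shards the index); the `SecondaryIndex` class, its methods, and the choice to treat both ends of `between` as inclusive are assumptions made purely for illustration.

```javascript
// Conceptual model only: a secondary index kept as an array of
// [indexedValue, primaryKey] pairs, sorted by indexedValue.
// SecondaryIndex is a hypothetical name, not part of any RethinkDB driver.
class SecondaryIndex {
  constructor(field) {
    this.field = field;
    this.entries = [];
  }

  // Position of the first entry whose indexed value is >= key (binary search).
  lowerBound(key) {
    let lo = 0;
    let hi = this.entries.length;
    while (lo < hi) {
      const mid = (lo + hi) >> 1;
      if (this.entries[mid][0] < key) lo = mid + 1;
      else hi = mid;
    }
    return lo;
  }

  // Keep the entries sorted as documents are added.
  insert(doc) {
    const key = doc[this.field];
    this.entries.splice(this.lowerBound(key), 0, [key, doc.id]);
  }

  // Primary keys of every document whose indexed value equals key.
  getAll(key) {
    return this.between(key, key);
  }

  // Primary keys with low <= indexed value <= high.
  // Inclusive bounds are an assumption of this sketch.
  between(low, high) {
    const ids = [];
    for (
      let i = this.lowerBound(low);
      i < this.entries.length && this.entries[i][0] <= high;
      i++
    ) {
      ids.push(this.entries[i][1]);
    }
    return ids;
  }
}

const index = new SecondaryIndex('last_name');
[
  { id: 1, last_name: 'Wade' },
  { id: 2, last_name: 'Smith' },
  { id: 3, last_name: 'Adams' },
  { id: 4, last_name: 'Smith' },
  { id: 5, last_name: 'Taylor' },
].forEach((doc) => index.insert(doc));

const smiths = index.getAll('Smith');
const smithToWade = index.between('Smith', 'Wade');
```

Because the entries stay sorted, both lookups only touch the matching slice of the index instead of scanning every document, which is the property that makes indexed range queries and joins efficient.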

Batched inserts performance improvements

Since the initial release, RethinkDB has supported inserting batches of documents. Instead of inserting multiple documents one at a time:

r.table('users').insert({ name: 'Michael' })

r.table('users').insert({ name: 'Bill' })

It was always possible to insert a batch of documents in one command with a single network roundtrip:

r.table('users').insert([{ name: 'Michael' }, { name: 'Bill' }])

However, until this release the server executed batched inserts by translating them into a series of single insert commands. Sending the data in a single network roundtrip reduced network latency, but performance was still very poor because the server had to flush each document to disk before moving on to the next one.

The 1.5 release includes a new insert algorithm that flushes changes to disk in batches while maintaining the guarantee of consistency in case of power failure. This algorithm drastically improves performance of batched inserts. While not something we’d call a benchmark, inserting 100 medium-sized documents went from 2.8 seconds to 160 milliseconds on a development system, and the ~18x performance improvement is consistently reproducible on different batched insert workloads and types of hardware.
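The difference between the two strategies can be illustrated with a toy model that simply counts disk flushes. This is a sketch under stated assumptions, not the server's actual algorithm: `FakeDisk`, `insertOneAtATime`, `insertBatched`, and the batch size are hypothetical names and values chosen for the example. The last line only reproduces the arithmetic behind the ~18x figure quoted above.

```javascript
// Hypothetical stand-in for a disk: it only records how often it is flushed.
class FakeDisk {
  constructor() {
    this.flushes = 0;
    this.persisted = [];
  }

  flush(docs) {
    this.flushes += 1;
    this.persisted.push(...docs);
  }
}

// Behaviour described for earlier releases: the batch is treated as a
// series of single inserts, and each document is flushed on its own.
function insertOneAtATime(disk, docs) {
  for (const doc of docs) {
    disk.flush([doc]);
  }
}

// Behaviour described for 1.5: changes are flushed to disk in batches.
// The batchSize parameter is an assumption of this sketch.
function insertBatched(disk, docs, batchSize) {
  for (let i = 0; i < docs.length; i += batchSize) {
    disk.flush(docs.slice(i, i + batchSize));
  }
}

const docs = Array.from({ length: 100 }, (_, i) => ({ id: i, name: 'user' + i }));

const slowDisk = new FakeDisk();
const fastDisk = new FakeDisk();
insertOneAtATime(slowDisk, docs); // 100 separate flushes
insertBatched(fastDisk, docs, 100); // a single flush for the whole batch

// Speedup implied by the timings quoted above: 2.8 s versus 160 ms.
const speedup = 2800 / 160;
```

Both disks end up holding the same 100 documents; only the number of flushes differs, and flushing is the expensive step.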

Soft durability mode

Early in the development of RethinkDB, we made the design decision to be extremely conservative about the durability and safety of users’ data. In this respect RethinkDB is like any other traditional database system – the server does not acknowledge a write until it’s safely committed to disk. While this is a sensible default for a database system, many of our users pointed out that they’re using RethinkDB for storing access logs or clickstream data, or as a persistent, replicated cache of JSON documents. These scenarios might require trading off some durability guarantees for higher performance.

The 1.5 release includes support for relaxed durability (off by default, of course). For tables in this mode, the server acknowledges writes as soon as it receives them, and flushes data to disk in the background. Flushes normally occur every few seconds, or if there are too many dirty blocks cached in memory.
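The acknowledge-first, flush-later behaviour described above can be modelled roughly as follows. This is an illustrative sketch only: `SoftDurabilityTable`, the `maxDirty` threshold, and the manual `flush()` call standing in for the periodic background flush are all assumptions, and the model counts pending writes rather than dirty blocks.

```javascript
// Conceptual model of soft durability. All names and thresholds here are
// hypothetical and do not correspond to RethinkDB internals.
class SoftDurabilityTable {
  constructor(maxDirty) {
    this.maxDirty = maxDirty; // flush early once this many writes are pending
    this.dirty = []; // acknowledged, but not yet on disk
    this.onDisk = [];
    this.flushCount = 0;
  }

  // The write is acknowledged as soon as it is buffered in memory.
  write(doc) {
    this.dirty.push(doc);
    if (this.dirty.length >= this.maxDirty) {
      this.flush(); // too much unflushed data: flush without waiting
    }
    return { acknowledged: true };
  }

  // In a real system a background timer would call this every few seconds.
  flush() {
    if (this.dirty.length === 0) return;
    this.onDisk.push(...this.dirty);
    this.dirty = [];
    this.flushCount += 1;
  }
}

const logs = new SoftDurabilityTable(3);

const ack = logs.write({ path: '/' });
const pendingAfterFirstWrite = logs.dirty.length; // acknowledged, not yet flushed

logs.write({ path: '/about' });
logs.write({ path: '/docs' }); // third pending write triggers an early flush
logs.write({ path: '/blog' });
logs.flush(); // stands in for the periodic background flush
```

The trade-off is visible in `pendingAfterFirstWrite`: a crash between the acknowledgment and the next flush would lose that write, which is why this mode suits logs and caches rather than critical data.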

Hard durability can be turned off when creating a table via the advanced settings in the web UI or in ReQL:

db.tableCreate('http_logs', { hard_durability: false })

You’ll notice that write performance on tables with hard durability turned off is about 30x faster than on normal tables. Note that the data still gets flushed to disk in the background and is consistent in case of failures. For those familiar with MySQL’s InnoDB engine, RethinkDB’s soft durability is similar to setting InnoDB’s innodb_flush_log_at_trx_commit to 0 (more info on this setting in InnoDB).

You can change the durability mode for an existing table via the admin CLI:

$ rethinkdb admin -j localhost:29015

localhost:29015> set durability http_logs --hard

Many more enhancements

The 1.5 release includes many more enhancements – check out the changelog for a complete list. Here are a few more notable changes:

- For super-fast insert performance, RethinkDB now supports noreply writes that allow writing data over the network without waiting for a server acknowledgment. This mode allows for an apples-to-apples benchmark comparison with MongoDB.

- The Data Explorer in the web UI now supports electric punctuation, as well as a toggle to turn it off. This can make typing queries in the data explorer much more pleasant.

- The web UI will now check if there is a new version of RethinkDB and inform you if you need to upgrade (the check is done as a simple AJAX request from the browser, and can be turned off).

Looking forward to 1.6

We are already hard at work on the 1.6 release. This release should be quick and easy, and will include many ReQL enhancements. We will also focus on continuously improving performance with each version. However, the next release isn’t set in stone. If a feature is important to you, let us know.

Slava Akhmechet
